Introduction

We know 100,000 DB requests per second sounds impossible… but Node.js makes it look easy!

In a world where speed and efficiency are paramount, a web application that lags or crashes can easily lose customers.

Whether you're running a microservice or a global enterprise-level application, your system needs to handle massive traffic efficiently, especially when scaling to manage millions of users.

Handling huge data volumes and ensuring backend performance may seem daunting, but companies like Netflix, Amazon, and LinkedIn make it look effortless. How?

The answer lies in the power of Node.js.

Over the years, Node.js has gained immense popularity because of how well it handles concurrency and scalability. Node.js is a JavaScript runtime rather than a language, and its strength comes from key features such as non-blocking I/O and an event-driven architecture, which let it juggle thousands of requests with ease.

Let's dive deeper into what concurrency and scalability issues are, and how Node.js handles them efficiently.

How do concurrency and scalability issues impact your setup?

If concurrency and scalability aren't properly handled, your web application can suffer from slow response times, increased downtime, and even crashes during peak traffic. In the fast-paced digital world, where users expect instant responses, failing to manage these issues can lead to poor user experiences and business losses.

Concurrency occurs when multiple tasks or requests need to be handled simultaneously. For example, a web server must handle hundreds or even thousands of user requests at once. If your server processes each request one at a time, users will experience delays—like waiting in line at a busy coffee shop where only one barista is working.

Scalability is the ability of your application to grow with demand. As your user base expands, can your app handle more traffic without crashing or slowing down? Think of scalability as your coffee shop being able to add more baristas to keep up with the line of customers.

At the core of concurrency is the idea of handling multiple tasks simultaneously without bottlenecks. For a web application, these tasks include reading files, querying databases, or serving web pages. Traditional server-side stacks such as PHP or Ruby typically handle concurrency by dedicating a thread or process to each request. However, every thread or process consumes memory, so scaling this way can become expensive. This is where Node.js offers a more efficient way to handle concurrency.

How does Node.js handle concurrency?

Node.js approaches concurrency differently, using an event-driven, non-blocking architecture. Let’s break down these key concepts:

Single-Threaded Event Loop

Unlike many other programming environments that rely on multiple threads to handle concurrent tasks, Node.js runs your JavaScript on a single main thread. You might wonder: how can a single thread possibly handle thousands of requests simultaneously? The answer is the event loop. The event loop handles I/O operations (reading files, querying databases) asynchronously. Rather than blocking the thread and waiting for these operations to complete, Node.js continues executing other code.

Here’s how the event loop works:

- When a request comes into the server (e.g., an HTTP request), or your code starts an operation such as a file read, Node.js hands the I/O off and registers a callback for it.

- Instead of waiting for that operation to complete, Node.js immediately moves on to the next task, such as handling another request.

- Once the I/O operation finishes (e.g., the data has been read from a file or database), the event loop runs the callback associated with it.
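The three steps above can be seen in a few lines of code. This is a minimal sketch, not a benchmark: `traceOrder` and `demoFile` are names made up for the illustration, and the temporary file is created only so there is something to read.

```javascript
const fs = require('node:fs');
const os = require('node:os');
const path = require('node:path');

// Setup only: create a small file to read (the path is an assumption for this demo).
const demoFile = path.join(os.tmpdir(), `event-loop-demo-${process.pid}.txt`);
fs.writeFileSync(demoFile, 'hello');

// Resolves with the order in which the three steps actually ran.
function traceOrder(file) {
  return new Promise((resolve, reject) => {
    const order = ['request received'];

    // Start the read. Node.js registers the callback and returns immediately.
    fs.readFile(file, 'utf8', (err) => {
      if (err) return reject(err);
      order.push('read finished, callback runs');
      resolve(order);
    });

    // Runs before the callback above, because the read is still in flight.
    order.push('moved on to other work');
  });
}

traceOrder(demoFile).then((order) => console.log(order.join(' -> ')));
// prints "request received -> moved on to other work -> read finished, callback runs"
```

The callback is always queued for a later turn of the event loop, which is why "moved on to other work" is recorded before the read completes.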

Non-blocking I/O

Node.js uses non-blocking I/O operations, meaning that time-consuming tasks like reading from a file or querying a database don't stop other tasks from being executed. Instead, Node.js relies on callbacks and promises to handle the results when they're ready. It can start input/output tasks and continue executing other code while it waits for a response.

For instance, in traditional systems where input/output operations block the application, a request to read a file makes the application stop and wait until the file has been read before it continues with other tasks. In Node.js, however, the file read operation is offloaded to the background, allowing the event loop to continue handling other tasks concurrently. Once the file is read, Node.js performs the necessary actions to complete the task.

This non-blocking nature gives Node.js its ability to handle large volumes of simultaneous connections, making it highly efficient for tasks like serving APIs or managing real-time data streams.

- Callbacks: a callback is a function that is passed as an argument to another function and is executed once an asynchronous task completes. For example, when querying a database, Node.js will not pause to wait for the result. Instead, you provide a callback function, which is executed once the database returns a response.

- Promises are another way to handle asynchronous tasks in a more structured manner, providing better error handling and chaining of asynchronous operations. Promises allow you to work with asynchronous code without nesting callbacks, making the code easier to read.

- Async/Await is a modern syntax built on top of promises that makes reading and writing asynchronous code feel like synchronous code, and prevents problems like deeply nested callbacks. It greatly simplifies error handling and code flow.
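To compare the three styles side by side, here is a small sketch that performs the same asynchronous operation three ways. It uses a file-size lookup as a stand-in for a database query; the function names and `sampleFile` are illustrative assumptions, not part of any library.

```javascript
const fs = require('node:fs');
const fsp = require('node:fs/promises');
const os = require('node:os');
const path = require('node:path');

// 1. Callback style: the result (or error) arrives as arguments to `done`.
function sizeWithCallback(file, done) {
  fs.stat(file, (err, stats) => {
    if (err) return done(err);
    done(null, stats.size);
  });
}

// 2. Promise style: the result is chained with .then(), errors with .catch().
function sizeWithPromise(file) {
  return fsp.stat(file).then((stats) => stats.size);
}

// 3. async/await: reads top to bottom, errors are handled with try/catch.
async function sizeWithAwait(file) {
  const stats = await fsp.stat(file);
  return stats.size;
}

// Setup only: a five-byte file to inspect (the path is an assumption for this demo).
const sampleFile = path.join(os.tmpdir(), `async-styles-${process.pid}.txt`);
fs.writeFileSync(sampleFile, 'hello');

sizeWithCallback(sampleFile, (err, size) => {
  if (err) return console.error(err);
  console.log('callback:', size);
});
sizeWithPromise(sampleFile)
  .then((size) => console.log('promise:', size))
  .catch(console.error);
(async () => {
  try {
    console.log('await:', await sizeWithAwait(sampleFile));
  } catch (err) {
    console.error(err);
  }
})();
```

All three report a size of 5 bytes; only the way the result and any error reach the caller differs.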

libuv Library

Behind the scenes, Node.js leverages the libuv library to provide an event loop and manage asynchronous I/O operations. Libuv handles the thread pool and supports asynchronous tasks like networking, file system operations, and timers.

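Libuv works in two ways:

- For network I/O (e.g., sockets), it uses the operating system's non-blocking mechanisms and reports completed operations through the event loop.

- For work that has no portable non-blocking equivalent, such as file system operations, DNS lookups, and some crypto and compression calls, it delegates the work to a thread pool to avoid blocking the event loop.

Essentially, libuv makes sure that Node.js can handle concurrent I/O operations efficiently. Your own CPU-bound JavaScript, such as complex computations, is not moved to the thread pool automatically; it still runs on the main thread unless you offload it yourself.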

Furthermore, the libuv library guarantees that background tasks run concurrently, enabling the event loop to execute JavaScript code seamlessly. Once the task is completed in the background, the event loop picks it up and invokes the necessary callback or promise.
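One way to watch the thread pool at work is `crypto.pbkdf2`, a built-in call that Node.js runs on libuv's thread pool. The sketch below starts four key derivations at once; the salt, iteration count, and the `hashInThreadPool` name are arbitrary choices for illustration.

```javascript
const crypto = require('node:crypto');

// Derives a key on the libuv thread pool and resolves with it as a hex string.
function hashInThreadPool(password) {
  return new Promise((resolve, reject) => {
    crypto.pbkdf2(password, 'demo-salt', 50000, 32, 'sha256', (err, key) => {
      if (err) return reject(err);
      resolve(key.toString('hex'));
    });
  });
}

async function demo() {
  const started = Date.now();
  // All four derivations are handed to the pool before any of them finishes.
  const hashes = await Promise.all(['a', 'b', 'c', 'd'].map(hashInThreadPool));
  console.log(`${hashes.length} hashes in ${Date.now() - started} ms`);
}

demo().catch(console.error);
```

The pool has four threads by default (configurable through the `UV_THREADPOOL_SIZE` environment variable), so on a multi-core machine the four derivations can run in parallel while the main thread stays free for other callbacks.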

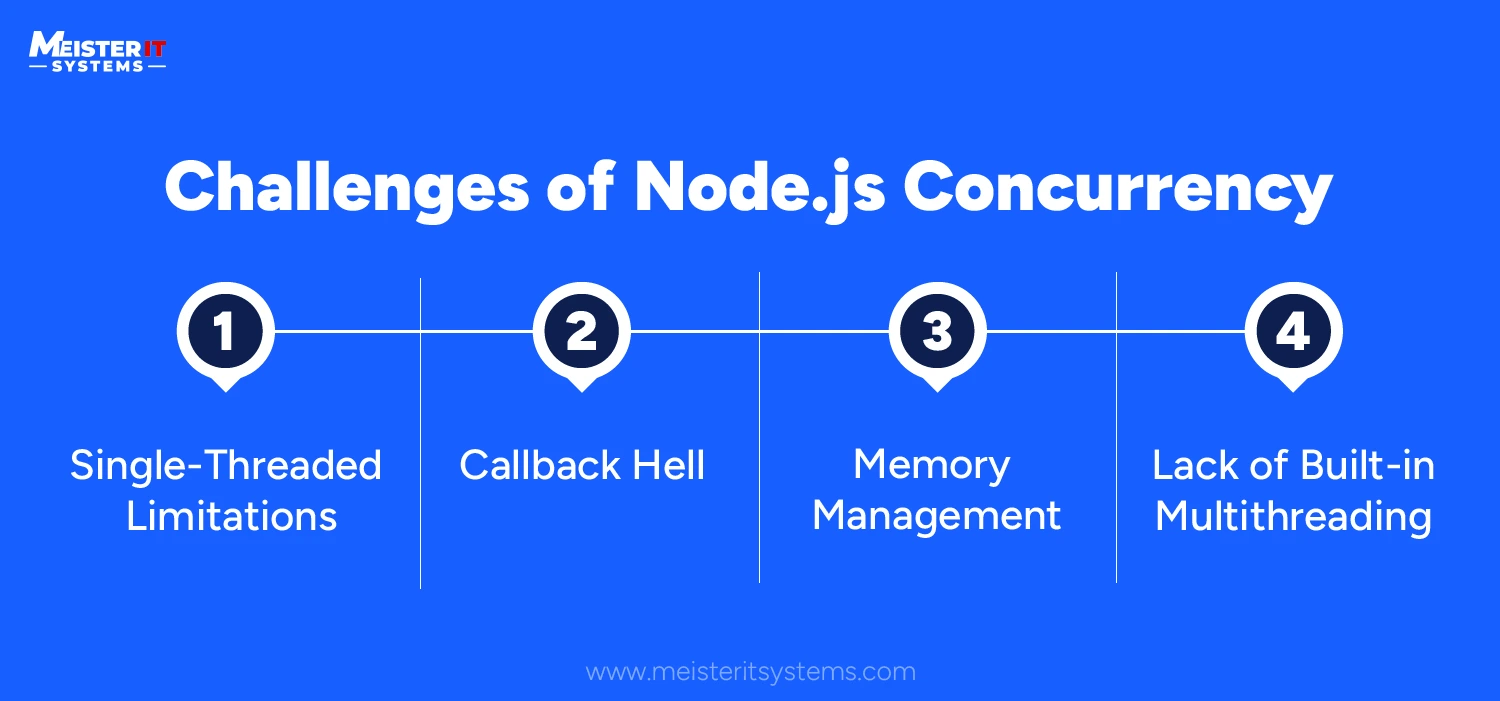

Challenges of Node.js Concurrency

While Node.js's concurrency model is efficient, it does come with some challenges. Below are common issues, along with practical solutions to prevent them:

Single-Threaded Limitations

Challenge

Node.js uses a single-threaded event loop, which is ideal for handling multiple concurrent I/O operations but struggles with CPU-intensive tasks. When heavy computations block the event loop, the server may become unresponsive, resulting in slow response times or timeouts for other users.

Solution

To address this, developers can offload CPU-intensive tasks to worker threads or external services. The Worker Threads module in Node.js allows you to create multiple threads to run heavy computations in parallel without causing the main event loop to be blocked.
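As a rough sketch of that approach, the example below runs a deliberately slow Fibonacci calculation in a worker thread. The worker's source is inlined as a string (using the `eval: true` option) only to keep the example in one file; in a real project it would normally live in its own module. `fibInWorker` is a name chosen for this illustration.

```javascript
const { Worker } = require('node:worker_threads');

// Worker source, inlined so the example is a single file.
const workerSource = `
  const { parentPort, workerData } = require('node:worker_threads');
  // Deliberately CPU-heavy: naive recursive Fibonacci.
  function fib(n) { return n < 2 ? n : fib(n - 1) + fib(n - 2); }
  parentPort.postMessage(fib(workerData));
`;

// Runs fib(n) off the main thread and resolves with the result.
function fibInWorker(n) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(workerSource, { eval: true, workerData: n });
    worker.once('message', resolve);
    worker.once('error', reject);
    worker.once('exit', (code) => {
      if (code !== 0) reject(new Error(`worker stopped with exit code ${code}`));
    });
  });
}

fibInWorker(30).then((result) => console.log('fib(30) =', result));
// prints "fib(30) = 832040"
```

While the worker is busy, the main event loop remains free to accept and answer other requests.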

Callback Hell

Challenge

Node.js heavily relies on callbacks to handle asynchronous operations, which can result in deeply nested code, also known as "callback hell." This makes the code challenging to read, maintain, and debug.

Solution

Promises and the async/await syntax make it easier to handle asynchronous operations. Promises enable the chaining of asynchronous tasks, whereas async/await flattens asynchronous code, making it more sequential and easier to maintain.
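The difference is easiest to see on a short chain of dependent steps. In this sketch, `getUser`, `getOrders`, and `sumTotals` are hypothetical callback-style functions standing in for real I/O; `util.promisify` converts them so the same flow can be written with async/await.

```javascript
const { promisify } = require('node:util');

// Hypothetical callback-style functions standing in for real I/O calls.
function getUser(id, cb) {
  setImmediate(() => cb(null, { id, name: 'Ada' }));
}
function getOrders(user, cb) {
  setImmediate(() => cb(null, [{ total: 20 }, { total: 22 }]));
}
function sumTotals(orders, cb) {
  setImmediate(() => cb(null, orders.reduce((sum, o) => sum + o.total, 0)));
}

// Nested version: each step adds a level of indentation and an error check.
function totalNested(id, done) {
  getUser(id, (err, user) => {
    if (err) return done(err);
    getOrders(user, (err, orders) => {
      if (err) return done(err);
      sumTotals(orders, done);
    });
  });
}

// Flattened version: the same steps, read top to bottom.
const getUserAsync = promisify(getUser);
const getOrdersAsync = promisify(getOrders);
const sumTotalsAsync = promisify(sumTotals);

async function totalFlat(id) {
  const user = await getUserAsync(id);
  const orders = await getOrdersAsync(user);
  return sumTotalsAsync(orders);
}

totalNested(1, (err, total) => console.log('nested:', err || total));
totalFlat(1).then((total) => console.log('flat:', total)).catch(console.error);
```

Both versions produce a total of 42. In the flat version, a single try/catch around the awaits (or one `.catch`) covers every step.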

Memory Management

Challenge

Although Node.js is designed to handle asynchronous operations efficiently, managing memory in large-scale applications can be difficult. Memory leaks occur when data is stored but not properly released, resulting in performance degradation and crashes.

Solution

Developers should monitor and optimise memory usage regularly using profiling tools such as the built-in Node.js profiler (node --prof) or Chrome DevTools (attached via node --inspect). Identifying memory leaks and optimising data structures can greatly improve memory management in Node.js applications.
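Between profiling sessions, a lightweight check built on `process.memoryUsage()` can flag a heap that keeps growing. This is a minimal sketch: `heapUsedMb`, `watchHeap`, and the 200 MB default limit are assumptions for illustration, and a real threshold depends on your application.

```javascript
// Current V8 heap usage in megabytes.
function heapUsedMb() {
  return process.memoryUsage().heapUsed / 1024 / 1024;
}

// Checks the heap on an interval and reports when it exceeds the limit.
// Returns a function that stops the monitoring.
function watchHeap({ intervalMs = 1000, limitMb = 200, onWarning = console.warn } = {}) {
  const timer = setInterval(() => {
    const used = heapUsedMb();
    if (used > limitMb) {
      onWarning(`heap at ${used.toFixed(1)} MB exceeds ${limitMb} MB`);
    }
  }, intervalMs);
  timer.unref(); // do not keep the process alive just for monitoring
  return () => clearInterval(timer);
}

const stopWatching = watchHeap({ intervalMs: 5000 });
console.log(`heap in use: ${heapUsedMb().toFixed(1)} MB`);
```

A warning that fires repeatedly under steady load is a hint to take heap snapshots in Chrome DevTools and compare them.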

Lack of Built-in Multithreading

Challenge

While Node.js is designed for non-blocking I/O, the JavaScript in a single Node.js process runs on one thread by default, so one process on its own does not spread work across multiple CPU cores. This can be a limitation when developing applications that require a lot of parallel processing.

Solution

As previously mentioned, Node.js now supports worker threads for CPU-bound tasks. Additionally, the cluster module or an external load balancer (e.g., Nginx) can be used to distribute incoming requests across multiple Node.js processes, resulting in better system resource utilisation.

Scalability: Expanding as You Grow

While concurrency deals with handling multiple tasks at once, scalability refers to how well your app can grow to handle an increasing number of users or tasks. As your web application gains more users, you want it to handle the increased load without slowing down or crashing.

Node.js offers several tools and techniques to improve scalability:

1. Clustering

Node.js is single-threaded by default, but with clustering, you can create multiple instances (or workers) of your Node.js app that run simultaneously. Each worker is a separate process that handles incoming requests independently, allowing you to take advantage of multi-core processors. All workers share the same port and split the workload, enabling the app to handle more requests as your user base grows. Across multiple servers, tools like NGINX and HAProxy are widely used for load balancing, routing traffic to the various server instances. Together, these approaches improve both responsiveness and availability.
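A minimal sketch of clustering with the built-in `cluster` module might look like the following. The `workerCount` helper, port 3000, and the `START_CLUSTER` environment variable are assumptions of this example; the variable is just an opt-in guard so that loading the file does not fork processes by accident. `cluster.isPrimary` requires Node.js 16 or later.

```javascript
const cluster = require('node:cluster');
const http = require('node:http');
const os = require('node:os');

// Hypothetical helper: one worker per CPU core unless a count is requested.
function workerCount(requested, cpuCount = os.cpus().length) {
  const count = requested ?? cpuCount;
  return Math.max(1, Math.floor(count));
}

// Primary process: fork the workers and replace any that exit.
function startPrimary(count) {
  for (let i = 0; i < count; i += 1) cluster.fork();
  cluster.on('exit', (worker) => {
    console.log(`worker ${worker.process.pid} exited, starting a new one`);
    cluster.fork();
  });
}

// Worker process: every worker listens on the same port.
function startWorker(port) {
  http
    .createServer((req, res) => {
      res.end(`handled by worker ${process.pid}\n`);
    })
    .listen(port);
}

// Opt-in guard (an assumption of this sketch, not a Node.js convention).
if (process.env.START_CLUSTER === '1') {
  if (cluster.isPrimary) startPrimary(workerCount());
  else startWorker(3000);
}
```

Started with `START_CLUSTER=1`, repeated requests to port 3000 are answered by different worker processes, which shows the workload being split behind a single port.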

2. Horizontal Scaling

Node.js works well with horizontal scaling, which means adding more servers to handle the increased load. Cloud platforms like AWS or Azure allow developers to scale a Node.js application by adding more instances during peak times and removing them when demand is lower, while the cluster module applies the same idea across the CPU cores of a single machine.

3. Load Balancing

A load balancer (such as Nginx or AWS ELB) distributes incoming traffic across multiple servers. This ensures that no single server becomes overwhelmed, enabling your app to handle high volumes of traffic efficiently.
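Round robin, which is Nginx's default strategy, is one simple way a load balancer chooses the next server. The sketch below shows only the selection logic, not a working proxy; `createRoundRobin` and the server addresses are made up for the illustration.

```javascript
// Returns a function that hands out servers in rotation.
function createRoundRobin(servers) {
  if (servers.length === 0) throw new Error('need at least one server');
  let next = 0;
  return function pick() {
    const server = servers[next];
    next = (next + 1) % servers.length; // wrap around after the last server
    return server;
  };
}

const pick = createRoundRobin(['app-1:3000', 'app-2:3000', 'app-3:3000']);
console.log(pick(), pick(), pick(), pick());
// prints "app-1:3000 app-2:3000 app-3:3000 app-1:3000"
```

Real load balancers add health checks and other strategies, such as routing to the server with the fewest active connections, on top of this basic rotation.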

4. Caching

By using caching tools like Redis or Memcached, apps can store frequently accessed data in memory. This reduces the load on the database and speeds up response times, allowing the app to scale better under heavy traffic.
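The pattern behind this is often called cache-aside: look in the cache first, and only query the database on a miss. The sketch below uses an in-process `Map` with a time-to-live as a stand-in for Redis or Memcached; `createCache`, `getOrLoad`, and `loadUser` are hypothetical names, and a shared cache server would be needed once several instances are involved.

```javascript
// In-memory cache with a time-to-live, standing in for Redis or Memcached.
function createCache(ttlMs, now = Date.now) {
  const entries = new Map();
  return {
    // Returns the cached value, or calls `loader` on a miss or expired entry.
    async getOrLoad(key, loader) {
      const hit = entries.get(key);
      if (hit && hit.expiresAt > now()) return hit.value;
      const value = await loader(key);
      entries.set(key, { value, expiresAt: now() + ttlMs });
      return value;
    },
  };
}

// Hypothetical slow lookup standing in for a database query.
let queries = 0;
async function loadUser(id) {
  queries += 1;
  return { id, name: `user-${id}` };
}

(async () => {
  const cache = createCache(60000);
  await cache.getOrLoad('42', loadUser); // miss: runs the query
  await cache.getOrLoad('42', loadUser); // hit: served from memory
  console.log('database queries:', queries);
  // prints "database queries: 1"
})();
```

The time-to-live bounds how stale a cached value can get, which is the main trade-off to tune for each kind of data.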

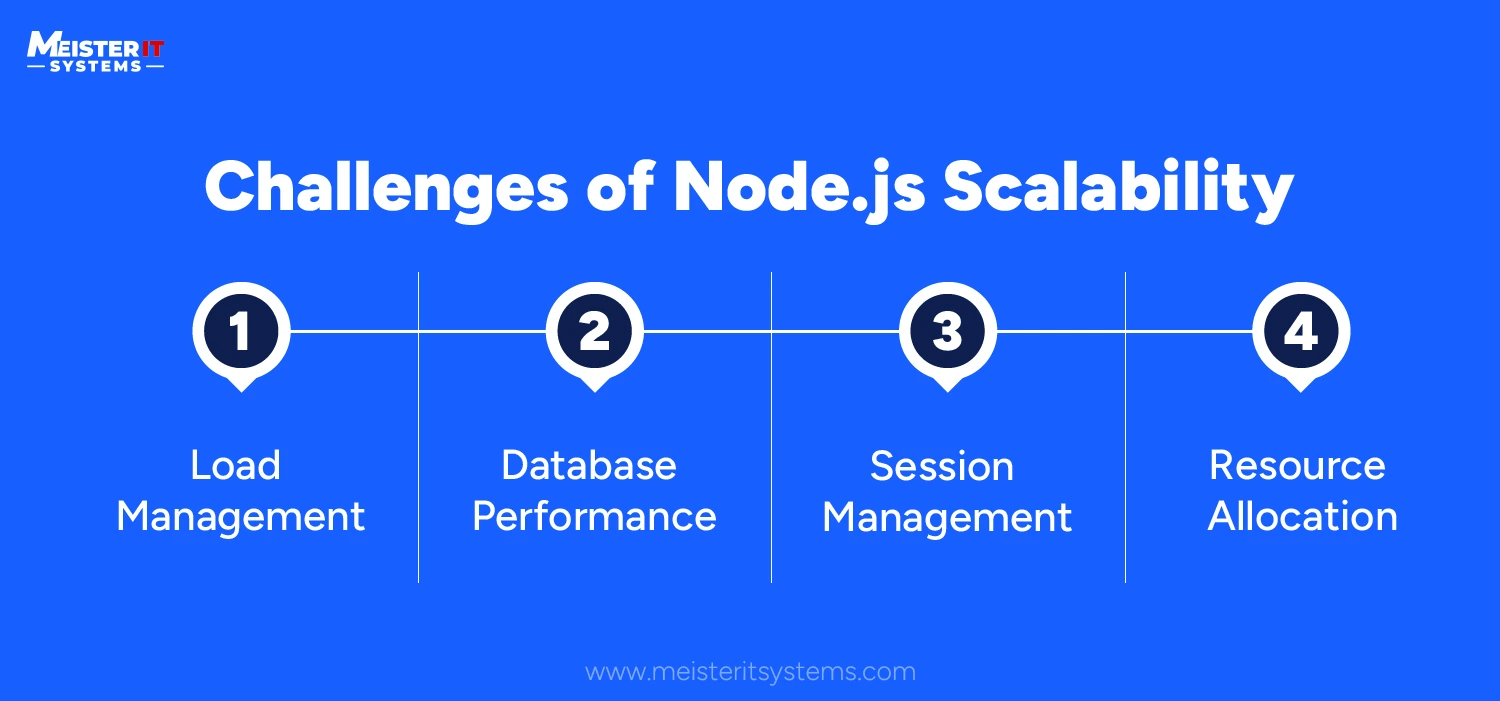

Challenges of Node.js Scalability

Scalability in Node.js web development presents several unique challenges that can impact application performance and user experience. Here are some challenges:

Load Management: As user demand escalates, Node.js servers can become overwhelmed, resulting in slow response times or outages.

Solution: Implement load balancing to distribute traffic evenly across multiple Node.js instances, preventing any single server from becoming a bottleneck and ensuring optimal performance.

Database Performance: As Node.js applications scale, database queries may slow due to increased data volume and concurrent requests, which can hinder overall performance.

Solution: Optimise database queries, employ indexing, and consider database sharding or replication to enhance performance and ensure that the database can handle the increased load.

Session Management: Managing user sessions can become challenging with multiple Node.js instances, leading to potential inconsistencies in session data.

Solution: Use centralised session management solutions, such as Redis, to maintain consistent session data across all Node.js servers, ensuring users have a seamless experience.

Resource Allocation: Inefficient resource allocation can waste computational power or lead to insufficient resources for handling requests.

Solution: Monitor application performance regularly and utilise auto-scaling features in cloud services to dynamically adjust resources based on real-time demand, ensuring your Node.js application remains responsive during peak loads.

By proactively addressing these scalability challenges, developers can enhance the performance and reliability of their Node.js applications, ensuring they can effectively meet growing user demands.

Real-World Node.js Backends Defying Concurrency and Scalability Challenges

Node.js powers some of the world’s prominent companies, such as Netflix, Amazon, and LinkedIn, demonstrating its effectiveness in managing concurrency and scalability:

Netflix: By switching to Node.js, Netflix significantly reduced startup time and handled millions of requests concurrently, greatly enhancing the user experience.

Amazon: Amazon uses Node.js for its dynamic web applications, allowing for quick data processing and efficient API responses. This enables the platform to handle a high volume of transactions simultaneously, ensuring a seamless shopping experience for millions of users.

LinkedIn: LinkedIn moved its mobile backend services to Node.js, allowing it to manage numerous connections with fewer servers. This shift improved both performance and cost efficiency, enabling faster updates and a better overall user experience.

These examples demonstrate how Node.js can efficiently support large-scale applications while optimising performance and resource usage.

Conclusion

Node.js offers a powerful solution for handling both concurrency and scalability in backend systems. Its architecture, which processes multiple requests without blocking the event loop, enables it to handle a large volume of requests efficiently, making it a great option for modern applications needing speed and flexibility.

Looking to build a Node.js application that can handle heavy loads and scale with your business needs? Our team at MeisterIT Systems, with extensive experience in Node.js development, is ready to provide tailored solutions to enhance the efficiency and scalability of your backend. Reach out to our team to learn how we can optimise your Node.js development.

Frequently Asked Questions

What are the key benefits of using Node.js when addressing concurrency and scalability?

Node.js's non-blocking I/O model and event-driven architecture allow it to handle thousands of concurrent requests efficiently. This makes it ideal for real-time applications and high-traffic websites, providing fast response times and optimal resource utilisation.

How does Node.js manage multiple requests without getting stuck?

Node.js uses an event loop to manage asynchronous operations. Instead of blocking the execution thread while waiting for tasks like database queries or file reads to complete, it continues processing other requests. Once the I/O operation is finished, the event loop processes the corresponding callback.

Is Node.js effective for CPU-intensive tasks?

While Node.js excels at handling I/O-bound tasks, it can struggle with CPU-intensive operations due to its single-threaded nature. To mitigate this, developers can use the Worker Threads module to offload heavy computations to separate threads, ensuring the main event loop remains responsive.

Which strategies can you employ to ensure scalability in your Node.js application?

To enhance scalability, implement load balancing to distribute incoming requests across multiple instances, use the cluster module to make use of every CPU core on each server, and consider caching frequently accessed data. Regular monitoring and optimisation are also essential to sustaining performance as user demand increases.